QoS, like any other kind of dimensioning, is a real challenge. A perfect QoS deployment depends on a really good understanding of the traffic flow, and this is something really hard to achieve. Most, if not all, companies out there have no idea of what is going through their cables, fibers or wireless links.

But, considering that a company out there wants to apply QoS within its network, it is necessary to do some math and try to get reasonable values based on the traffic and applications in use.

Theoretically, traffic is divided into four categories:

-Internetworking Traffic - Basically routing protocols and other protocols used to run the network

-Critical Traffic - Voice, Video, etc.

-Standard Data Traffic - Data traffic like HTTP

-Scavenger Traffic - Traffic that is not important and sometimes not desirable.

Technically, things happen very deep inside the packets and frames. Understanding QoS involves a deep understanding of how the bits in a packet work. As important as knowing what those bits do is being able to handle them, that is, to change them according to customer requirements in order to shape the traffic.

The traffic needs to be classified first. To classify means to tell packets apart from each other; once identified they are marked, as if we put a color on them. The way to do that is by changing specific bits within a packet, or by using an access list to single out whole packets within a packet flow.
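To make "changing specific bits" a little more concrete, here is a minimal Python sketch of marking. It assumes the marking lives in the upper six bits of the IP ToS byte (the DSCP interpretation discussed later in this article) and that the two lower bits must be left untouched. The function name `mark_dscp` is made up for illustration; it is not a command or API of any device.

```python
def mark_dscp(tos_byte, dscp):
    """Return a new ToS byte carrying `dscp` in its upper six bits.

    The two low-order bits of the original byte are preserved.
    """
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must fit in 6 bits (0-63)")
    return (dscp << 2) | (tos_byte & 0x03)


# An unmarked packet whose two low-order bits are 01, re-marked with DSCP 46.
original_tos = 0b00000001
marked_tos = mark_dscp(original_tos, 46)
print(format(marked_tos, "08b"))
```

The point of the sketch is only that marking is a rewrite of a few header bits; the rest of the packet is not touched.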

To start a fresh QoS deployment, it is considered good practice to deploy a device on the network that is able to identify all kinds of traffic and generate reports based on traffic type and percentage. At this point you do not need to perform any kind of traffic control, only identify which kinds of traffic go back and forth on the network and what percentage of the whole traffic each one occupies.
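As a rough idea of what such a report boils down to, here is a small Python sketch that turns byte counts per traffic type into percentages. The sample numbers and the traffic type names are invented for the example; a real deployment would get them from whatever monitoring device is in use.

```python
from collections import Counter


def traffic_shares(samples):
    """Return the percentage of total bytes seen per traffic type."""
    totals = Counter()
    for traffic_type, byte_count in samples:
        totals[traffic_type] += byte_count
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {t: round(100.0 * b / grand_total, 1) for t, b in totals.items()}


# Hypothetical observations: (traffic type, bytes seen)
samples = [("voice", 200), ("http", 500), ("routing", 50), ("http", 250)]
shares = traffic_shares(samples)
for traffic_type, percent in shares.items():
    print(traffic_type, percent)
```

With numbers like these in hand, the bandwidth percentages chosen later for each class are no longer a guess.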

I've never performed a QoS project so far but, just like a site survey, the procedure described above is not as common as it should be.

Anyway, from now on we are going to focus on QoS theory. As said above, traffic needs to be classified at some point of the network. The marking can be done at layer 2 or layer 3 of the OSI model. Layer 2 frames have a field called CoS (Class of Service), defined by 802.1p.

802.1p is part of 802.1Q, so it is expected to be seen only on trunk links. Access ports normally send untagged frames and therefore have no way to carry this kind of marking.
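To show where those CoS bits actually sit, here is a short Python sketch that splits the 16-bit Tag Control Information of an 802.1Q tag into its three parts: 3 bits of priority (the CoS value), 1 drop-eligible bit and 12 bits of VLAN ID. The function names and the sample values are mine, chosen only for illustration.

```python
def build_tci(cos, dei, vlan_id):
    """Pack CoS (3 bits), DEI (1 bit) and VLAN ID (12 bits) into a 16-bit TCI."""
    return ((cos & 0x7) << 13) | ((dei & 0x1) << 12) | (vlan_id & 0x0FFF)


def parse_tci(tci):
    """Split a 16-bit 802.1Q TCI into (cos, dei, vlan_id)."""
    cos = (tci >> 13) & 0x7
    dei = (tci >> 12) & 0x1
    vlan_id = tci & 0x0FFF
    return cos, dei, vlan_id


# Hypothetical voice frame: CoS 5 on VLAN 100
tci = build_tci(5, 0, 100)
print(hex(tci), parse_tci(tci))
```

No tag means no TCI, which is why an untagged frame has nowhere to carry a CoS value.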

Packets can be marked at Layer 3 of the OSI model using the ToS field.

As we can see from the figure, the ToS field is within the IP packet header and is divided into 8 bits. The function and interpretation of these bits will be discussed in detail in the next section.

The CoS field has 3 bits and 8 possible values, whilst the ToS field has 8 bits, of which DSCP uses 6, giving 64 possible values.

The interpretation of the bits has two definitions: IP Precedence and DSCP (Differentiated Services Code Point). Well, we are going too deep here.

The fact is, this is all about interpretation. IP Precedence uses only the first three bits whilst DSCP uses the first six. The two remaining bits are used for congestion notification (ECN).
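The two interpretations can be sketched in a few lines of Python. The same byte is read three ways: the top three bits as IP Precedence, the top six bits as DSCP, and the last two bits as the congestion bits. The function name `split_tos` and the sample byte are just for this example.

```python
def split_tos(tos_byte):
    """Return (ip_precedence, dscp, ecn) read from one 8-bit ToS value."""
    ip_precedence = (tos_byte >> 5) & 0x07  # first three bits
    dscp = (tos_byte >> 2) & 0x3F           # first six bits
    ecn = tos_byte & 0x03                   # last two bits
    return ip_precedence, dscp, ecn


# 10111000: a byte commonly seen on voice packets
precedence, dscp, ecn = split_tos(0b10111000)
print(precedence, dscp, ecn)
```

Nothing in the packet changes between the two readings; only the number of bits the device looks at does.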

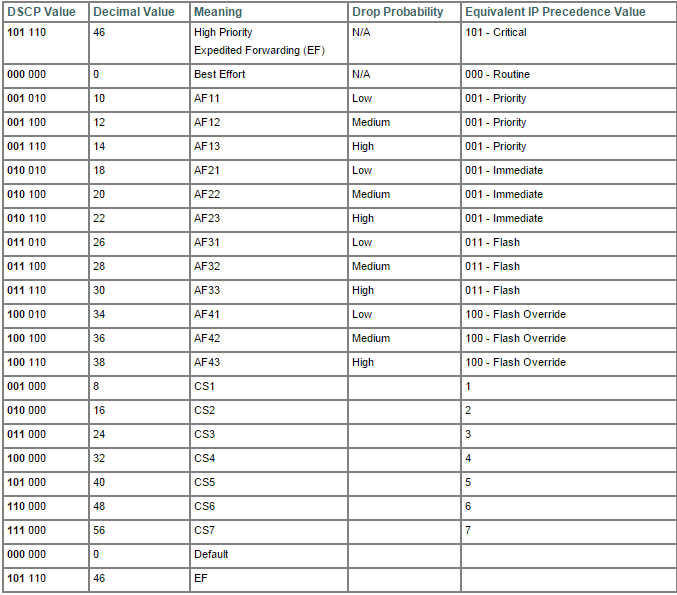

All this is pretty evident when you look at the table below:

Basically, this table summarizes all we need to know about QoS in terms of bits.

We can see on the right that IP Precedence has fewer classes into which we can mold our traffic. On the other hand, on the left we can see that DSCP has plenty of possibilities. We can spread the traffic out across all these classes.

Despite all these values, DSCP can be divided into basically four groups: Best Effort, Assured Forwarding, Expedited Forwarding and Class Selector. Best Effort means no QoS, Expedited Forwarding means the highest priority a packet can have, Assured Forwarding offers many possibilities and Class Selector has a special meaning.

In terms of bits, Best Effort is represented by 000 in the first three bits (000000 in full), Assured Forwarding by 001 to 100 and Expedited Forwarding by 101 (101110 in full, decimal 46). Class Selector represents the situation in which the last three bits are set to zero, thus mapping to IP Precedence and CoS perfectly. For example, the sequence 011000 is CS3; the Assured Forwarding values of the same class are AF31, AF32 and AF33, which set the drop precedence bits as well. We can see CS1 to CS7 in the table. In all these cases there is a perfect match with CoS and IP Precedence.
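The naming rules can be checked with a small Python sketch. It assumes the usual layout: the first three bits are the class, the next two bits are the drop precedence for Assured Forwarding, and the last bit is zero for all the named values. The function `dscp_name` is only an illustration and returns a generic label for values that have no standard name.

```python
def dscp_name(dscp):
    """Return the common name of a 6-bit DSCP value."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must fit in 6 bits (0-63)")
    traffic_class = dscp >> 3   # first three bits
    low_bits = dscp & 0x07      # last three bits
    if dscp == 0:
        return "BE"
    if dscp == 46:
        return "EF"
    if low_bits == 0:
        return f"CS{traffic_class}"
    # AFxy: class x in 1..4, drop precedence y in 1..3, last bit zero
    if 1 <= traffic_class <= 4 and (low_bits & 1) == 0:
        return f"AF{traffic_class}{low_bits >> 1}"
    return f"DSCP {dscp}"


for value in (0b000000, 0b011000, 0b011010, 0b101110):
    print(format(value, "06b"), value, dscp_name(value))
```

Reading the output next to the decimal column of a DSCP table is a quick way to convince yourself that CSn is always n times 8.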

We can also see in the table above a second column, called Decimal Value. DSCP is usually written as a decimal value, which makes things easier to write and read.

A Wireless Engineer is not expected to know all these bit functions. But I personally don't like to narrow down my knowledge. So, I want to know everything!

Considering that now you know all the bit functions, it is time to organize all the information and get familiar with queues and device configuration.

There are basically three kinds of queues:

-Priority Queue (PQ)

-Custom Queue (CQ)

-Weighted Fair Queue (WFQ)

PQ - One queue is prioritized over all the other queues, no matter what.

CQ - Distributes the traffic among several queues, which are served in turn.

WFQ - In its basic form, it prioritizes smaller packets and packets with a higher value in the ToS field (IP Precedence).
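To see what "no matter what" means for PQ, here is a minimal Python sketch of a strict priority scheduler. It covers PQ only, not CQ or WFQ, and it is a toy model of the idea rather than how any router implements it. The class name and the packet labels are made up.

```python
from collections import deque


class StrictPriorityScheduler:
    """Always serve the highest-priority non-empty queue first."""

    def __init__(self, levels):
        # Index 0 is the highest priority.
        self.queues = [deque() for _ in range(levels)]

    def enqueue(self, level, packet):
        self.queues[level].append(packet)

    def dequeue(self):
        for queue in self.queues:
            if queue:
                return queue.popleft()
        return None  # nothing waiting


scheduler = StrictPriorityScheduler(levels=2)
scheduler.enqueue(1, "data-1")
scheduler.enqueue(0, "voice-1")
scheduler.enqueue(1, "data-2")
scheduler.enqueue(0, "voice-2")
order = [scheduler.dequeue() for _ in range(4)]
print(order)
```

The data packets only leave once the voice queue is empty, which is exactly why an unlimited priority queue can starve everything else.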

R1(config)# policy-map MyPolicy

R1(config-pmap)# class MyClass1

R1(config-pmap-c)# bandwidth percent 20

R1(config-pmap-c)# class MyClass2

R1(config-pmap-c)# bandwidth percent 30

R1(config-pmap-c)# class class-default

R1(config-pmap-c)# bandwidth percent 35

R1(config-pmap-c)# end

First of all, we define a policy-map. After all, it is not enough just to create queues. It is necessary to apply those queues somewhere, and the way we do that is through a policy.

Inside the policy map we create classes. Here we named them MyClass1, MyClass2, etc. At the end we have a default class. It is somewhat like firewall rules: if something does not match any condition, it falls into the default class.
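The firewall-like behavior and the meaning of the percentages can be sketched in Python. The class names and percentages come from the policy above; the 10 Mbps link speed and the DSCP values used to match each class are my own assumptions, since the article does not show the match criteria.

```python
# (class name, bandwidth percent), as in the policy-map above
POLICY = [("MyClass1", 20), ("MyClass2", 30), ("class-default", 35)]

# Hypothetical match criteria: which DSCP values belong to which class
MATCHES = [("MyClass1", {46}), ("MyClass2", {26, 28, 30})]


def classify(dscp, matches):
    """Return the first class whose condition matches, else class-default."""
    for name, dscp_values in matches:
        if dscp in dscp_values:
            return name
    return "class-default"


def guaranteed_kbps(link_kbps, policy):
    """Translate each bandwidth percent into kbps on a given link."""
    return {name: link_kbps * percent / 100 for name, percent in policy}


guarantees = guaranteed_kbps(10000, POLICY)  # assumed 10 Mbps link
print(classify(46, MATCHES), classify(0, MATCHES))
print(guarantees)
```

Anything that matches no class, like DSCP 0 here, lands in class-default, just like the last rule of a firewall.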

There are also some variants of those queues:

-CBWFQ (Class-Based Weighted Fair Queue). It allows you to distribute traffic among user-defined classes.

-LLQ (Low Latency Queue). This is a variant of the queue explained earlier. In this scenario, one queue works like a priority queue; therefore, the amount of traffic allowed in it is limited. The other queues are served just like normal CBWFQ.

Let's see, in terms of commands, how things go:

R1(config)# policy-map MyPolicy

R1(config-pmap)# class MyVoiceClass

R1(config-pmap-c)# priority percent 20

R1(config-pmap-c)# class MyClass2

R1(config-pmap-c)# bandwidth percent 30

R1(config-pmap-c)# class class-default

R1(config-pmap-c)# bandwidth percent 35

R1(config-pmap-c)# end

R1#

When it comes to LLQ, we can see a new command: priority, followed by the percentage. In this case, it was set to 20%. It means this queue, generally used for voice, can use up to 20% of the whole bandwidth, but not more than that. If the link is congested and the priority traffic goes above 20%, the excess packets start to be dropped.
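Here is the arithmetic behind that limit as a small Python sketch. It assumes a 10 Mbps link and that the 20% ceiling is enforced only while the link is congested, which is my understanding of how the priority queue is policed; treat both as assumptions. The function name is invented for the example.

```python
def llq_priority_outcome(link_kbps, priority_percent, offered_kbps, congested=True):
    """Return (forwarded_kbps, dropped_kbps) for the priority class."""
    limit_kbps = link_kbps * priority_percent / 100
    if not congested or offered_kbps <= limit_kbps:
        return float(offered_kbps), 0.0
    return limit_kbps, offered_kbps - limit_kbps


# 2.5 Mbps of voice offered to a congested 10 Mbps link with priority percent 20
forwarded, dropped = llq_priority_outcome(10000, 20, 2500)
print(forwarded, dropped)
```

On these numbers, 500 kbps of voice would be lost, which is a good reason to size the priority percentage from real measurements.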

Besides these queues, we can find congestion avoidance mechanisms such as WRED (Weighted Random Early Detection). It is very interesting because it uses a natural mechanism of TCP to control traffic congestion. By dropping some packets and forcing retransmission, it makes the TCP sender reduce its window, and the amount of traffic is consequently reduced.

To enable this feature, just type random-detect at interface configuration level.
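The "random early" part can be sketched in Python. The textbook model is: below a minimum queue depth nothing is dropped, above a maximum depth everything is dropped, and in between the drop probability grows linearly up to a configured ceiling. The thresholds and the ceiling of 1/10 below are example values I picked, not the defaults of any platform.

```python
def wred_drop_probability(avg_depth, min_threshold, max_threshold, mark_denominator=10):
    """Drop probability for one traffic class given the average queue depth."""
    if avg_depth < min_threshold:
        return 0.0
    if avg_depth >= max_threshold:
        return 1.0  # behaves like tail drop
    max_probability = 1.0 / mark_denominator
    span = max_threshold - min_threshold
    return max_probability * (avg_depth - min_threshold) / span


# Hypothetical thresholds of 20 and 40 packets
for depth in (10, 30, 40):
    print(depth, wred_drop_probability(depth, 20, 40))
```

The "weighted" part comes from giving more important traffic a higher minimum threshold, so its packets start being dropped later than the others.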

Well, it works like a charm for TCP, but what about UDP? UDP does not use a window. Well, I intend to write another article about QoS on wireless networks, just like I did with Multicast. There we will see CAC, and the mystery of UDP congestion control will be solved, or handled at least.

We also have one more mechanism, called LFI (Link Fragmentation and Interleaving). Basically it breaks bigger packets into smaller ones and interleaves small packets between the fragments, avoiding the delay caused by large packets.
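The delay in question is serialization delay, and a bit of arithmetic in Python shows why large packets hurt on slow links. The 64 kbps link and the 10 ms target are example values I chose; the function names are made up for this sketch.

```python
def serialization_delay_ms(size_bytes, link_kbps):
    """Time to put one packet on the wire: bits divided by kbit/s gives ms."""
    return size_bytes * 8 / link_kbps


def fragment_size_bytes(link_kbps, target_delay_ms):
    """Largest fragment that can be sent within the target delay."""
    return int(link_kbps * target_delay_ms / 8)


full_packet_delay = serialization_delay_ms(1500, 64)
fragment = fragment_size_bytes(64, 10)
print(full_packet_delay, fragment)
```

A voice packet stuck behind one 1500-byte packet on such a link waits far longer than it would behind a single small fragment, and that is the problem LFI addresses.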

That's all for now. It is important to say that QoS is an end-to-end deployment. It is a waste of time to deploy QoS considering all the requirements if at one point, one single point, you do not. Everything you have done is gone.

I hope you guys enjoy the article. I am working on a Wireless QoS article; after all, this is supposed to be a Wireless career path. This is supposed to be a self-study blog and currently I am trying to get my CCNP Wireless.

Flavio Miranda.
