Hello all,

In today's article we are going to start working with two of the most exciting and powerful platforms I have ever seen: Python and AWS.

Neither of them needs an introduction, so let's get started:

In Python, we are going to install the AWS interface library called Boto. I have no idea where this name came from (I'll look it up later), but the boto, as I know it, is an animal found in Brazil, specifically in the Amazon rainforest, and it is known as a magical animal. Well, the animal itself is real and I know it: it is a kind of cousin of the dolphin, and very similar to it. As for the magic, that's up to you!

To install it, just run pip install boto.

The next step is taking care of security. When you create your account in the AWS console, you can save your credentials in a CSV file. If you haven't done that yet, we can do it now:

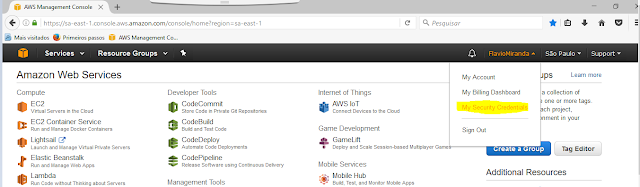

In the AWS console dashboard, go to your account and "My Security Credentials":

You can create a new Access Key.

In my case, I have already done this, and I could create a new key if necessary. Luckily, I don't need to.

Once your Access Key is created, you can download it as a CSV file. Keep it safe! Now we are going to use it in our Python code.
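If you prefer to pull the two values out of the downloaded CSV with Python instead of copying them by hand, here is a minimal sketch. It assumes the header row uses the column names "Access key ID" and "Secret access key"; check your own file, since the exact layout may differ. The function names and the file name accessKeys.csv are only illustrative.

```python
import csv

# Assumed column names in the CSV downloaded from the AWS console;
# adjust them if your file uses different headers.
KEY_ID_COLUMN = "Access key ID"
SECRET_COLUMN = "Secret access key"


def parse_access_key_csv(lines):
    """Return (access_key_id, secret_access_key) from the CSV's lines."""
    row = next(csv.DictReader(lines))  # first data row after the header
    return row[KEY_ID_COLUMN].strip(), row[SECRET_COLUMN].strip()


def read_access_key_csv(path):
    """Open the downloaded CSV file and parse it."""
    # utf-8-sig drops a byte-order mark at the start of the file, if present.
    with open(path, newline="", encoding="utf-8-sig") as f:
        return parse_access_key_csv(f)


# Usage (the file name is only an example):
#   key_id, secret = read_access_key_csv("accessKeys.csv")
#   print("Loaded key", key_id)  # avoid printing the secret itself
```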

First of all, create a file called boto.cfg in /etc. Writing to /etc usually requires root privileges, so use sudo:

sudo nano /etc/boto.cfg

Inside the file, put your Access Key ID and your Secret Access Key, like this:
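As a sketch of what the file should contain: boto 2 expects a [Credentials] section holding aws_access_key_id and aws_secret_access_key. The snippet below builds that text and checks that it parses; render_boto_cfg and has_credentials are illustrative helper names, not part of boto, and the values in the usage comment are placeholders.

```python
import configparser

# Layout that boto 2 reads from /etc/boto.cfg (it also looks in ~/.boto).
BOTO_CFG_TEMPLATE = """\
[Credentials]
aws_access_key_id = {key_id}
aws_secret_access_key = {secret}
"""


def render_boto_cfg(key_id, secret):
    """Return the text to paste into boto.cfg."""
    return BOTO_CFG_TEMPLATE.format(key_id=key_id, secret=secret)


def has_credentials(cfg_text):
    """True if the text parses and defines both credential entries."""
    parser = configparser.ConfigParser()
    parser.read_string(cfg_text)
    return (parser.has_option("Credentials", "aws_access_key_id")
            and parser.has_option("Credentials", "aws_secret_access_key"))


# Usage with placeholder values; replace them with the ones from your CSV:
#   print(render_boto_cfg("YOUR_ACCESS_KEY_ID", "YOUR_SECRET_ACCESS_KEY"))
```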

Save the file.

Now we are going to explore some AWS Python commands:

Open up a new file and create a script like this one:
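Since the script itself is not reproduced in this text, here is a sketch that puts together the calls explained below, using the legacy boto 2 API that pip install boto provides. The calls are wrapped in a hypothetical upload_public_file function that receives the connection as a parameter, which keeps all the S3 calls in one place and lets you try the logic without touching AWS. The bucket name and file paths are placeholders.

```python
def upload_public_file(conn, bucket_name, key_name, local_path):
    """Create a bucket, upload one local file into it and make it public.

    `conn` is expected to be the object returned by boto.connect_s3().
    """
    bucket = conn.create_bucket(bucket_name)    # bucket names must be unique
    key = bucket.new_key(key_name)              # e.g. "Directory/file"
    key.set_contents_from_filename(local_path)  # upload the local file
    key.set_acl("public-read")                  # let anyone read the object
    return key


# Usage (needs boto installed and valid credentials in /etc/boto.cfg):
#   import boto
#   s3 = boto.connect_s3()
#   upload_public_file(s3, "my-unique-bucket-name-12345",
#                      "Directory/file.txt", "/path/to/local/file.txt")
```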

This very simple script accomplishes something amazing. Let's go through it line by line.

First we need to import boto.

Then we create a variable called s3 and assign it the result of boto.connect_s3(). This function opens a connection to Amazon S3 from Python, using the credentials stored in boto.cfg.

On the next line we create another variable called bucket: we call the create_bucket method on s3, the connection defined above, and pass the bucket's name as its argument. As the comment in the script says, a bucket must have a unique name: bucket names are shared across all AWS accounts, so a name that someone else already uses will be rejected.
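Because the name must be unique, a common trick is to append a random suffix. The sketch below does that and also checks a simplified subset of the S3 naming rules (3 to 63 characters; lowercase letters, digits, dots and hyphens; starting and ending with a letter or digit; no adjacent dots; not shaped like an IP address). The helper names are hypothetical, and a random suffix only makes collisions unlikely, so create_bucket can still fail if the name happens to be taken.

```python
import re
import uuid

# Simplified subset of the S3 bucket-naming rules (not exhaustive).
_NAME_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
_IP_RE = re.compile(r"\d+\.\d+\.\d+\.\d+")


def is_valid_bucket_name(name):
    """Check a name against the simplified rules above."""
    return (_NAME_RE.fullmatch(name) is not None
            and ".." not in name
            and _IP_RE.fullmatch(name) is None)


def unique_bucket_name(prefix):
    """Append a random suffix so the name is very unlikely to be taken."""
    name = f"{prefix.lower()}-{uuid.uuid4().hex[:12]}"
    if not is_valid_bucket_name(name):
        raise ValueError(f"cannot build a valid bucket name from {prefix!r}")
    return name
```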

Now that we have created our bucket, let's store something in it. The way we do that is:

key = bucket.new_key("Directory/file")

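Here we create one more variable, key, and give it the name (directory and file) under which we want to store the object in the cloud. This can be anything you like; S3 has no real directories, so the "Directory/" part simply becomes a prefix of the key name.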
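Since the key is just a string, a small helper can derive it from the local file you are uploading. This is a sketch with a hypothetical key_name_for function; it assumes POSIX-style local paths, as on the Linux machine used above.

```python
import posixpath
from pathlib import PurePath


def key_name_for(local_path, prefix=""):
    """Build an S3 key such as "Directory/file.txt" from a local file path.

    The "/" in the result is simply part of the key name; the S3 console
    displays the part before it as if it were a folder.
    """
    filename = PurePath(local_path).name
    return posixpath.join(prefix, filename) if prefix else filename
```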

Next, we use this variable with the set_contents_from_filename method to send a file from our computer to the cloud:

key.set_contents_from_filename("Your PC Directory/Your file")

Lastly, we set the object's permissions so that anyone can read it:

key.set_acl('public-read')
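With 'public-read' in place, anyone who knows the object's URL can fetch it. The sketch below builds that URL in the virtual-hosted style (https://bucket.s3.amazonaws.com/key), assuming the bucket lives in us-east-1, where boto 2's create_bucket puts it by default; the public_url helper is hypothetical. Note also that, since April 2023, new S3 buckets have Block Public Access enabled and ACLs disabled by default, so the set_acl call may be rejected unless those bucket settings are changed.

```python
from urllib.parse import quote


def public_url(bucket_name, key_name):
    """URL at which a 'public-read' object can be fetched without credentials.

    Assumes the global s3.amazonaws.com endpoint (a us-east-1 bucket).
    """
    # Keep "/" unescaped so "Directory/file" stays a readable path.
    return f"https://{bucket_name}.s3.amazonaws.com/{quote(key_name, safe='/')}"
```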

Now let's run our script and see what it accomplishes in the AWS console!

And here we go! We have just created a brand new bucket from the command line. Let's see what's inside this bucket:
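You can also look inside the bucket from Python instead of the console. This is a minimal sketch using the boto 2 calls get_bucket and list(); the list_bucket function name and the bucket name in the usage comment are placeholders, and the connection is passed in so the logic can be tried without AWS.

```python
def list_bucket(conn, bucket_name):
    """Return (key name, size in bytes) for every object in the bucket.

    `conn` is expected to be the object returned by boto.connect_s3().
    """
    bucket = conn.get_bucket(bucket_name)
    return [(key.name, key.size) for key in bucket.list()]


# Usage (needs boto installed and valid credentials):
#   import boto
#   s3 = boto.connect_s3()
#   for name, size in list_bucket(s3, "my-unique-bucket-name-12345"):
#       print(f"{name}\t{size} bytes")
```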

That's it, guys. This is a small step, but there is a lot of power behind it, and our goal here is to explore as many of these possibilities as we can.

Cheers!
